Scam syndicates are going global — and going high-tech.

What used to be a street-level hustle is now a billion-dollar operation powered by generative AI and anonymous crypto wallets. As governments across East and Southeast Asia crack down hard on local fraud rings, these criminal groups aren’t retreating; they’re reinventing themselves.

According to a 2025 report from the United Nations Office on Drugs and Crime (UNODC), organized crime is evolving into a globalized tech industry, with scam centers industrializing their methods and laundering billions across borders. The dark web, AI-powered forgery, and underground banking have all become tools in this new digital crime economy.

And one of the most dangerous tools of all? Deepfakes.

How Fraudsters Fool Verification Systems

Deepfakes (a blend of “deep learning” and “fake”) are no longer just viral meme generators; they have become a go-to weapon in the fraudster’s playbook. Powered by artificial intelligence, deepfake tools can turn just a few photos into lifelike videos, complete with natural blinking, head tilts, and even micro-expressions. What once required a visual effects studio can now be done on a laptop, which could be bad news for anyone relying solely on 2D face recognition.

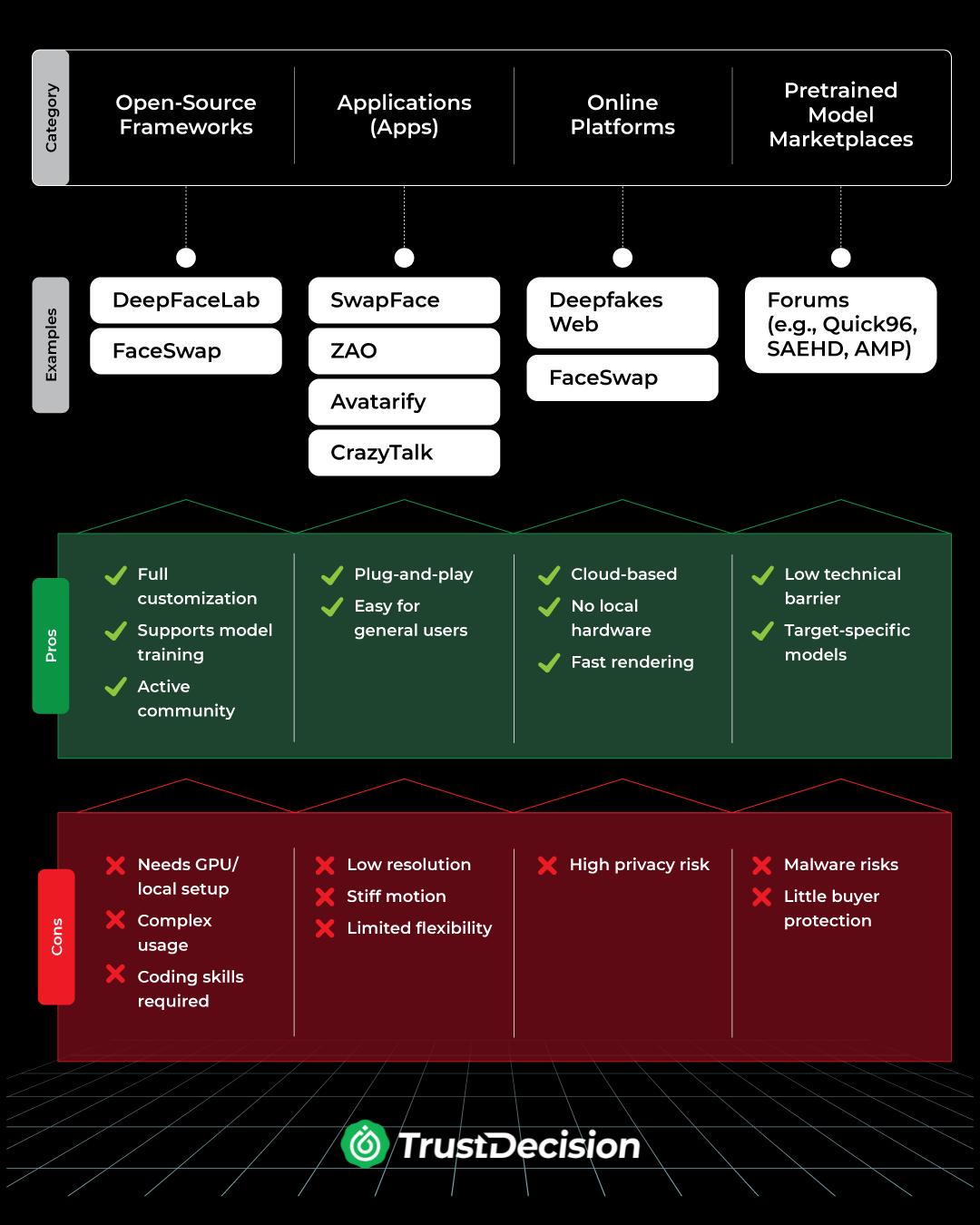

Deepfake Tools: What’s Out There?

From open-source code to plug-and-play apps, fraudsters today have a full toolbox. Here’s a breakdown:

How Deepfakes Are Crafted to Bypass Biometric Verification

Once equipped with the right tools, attackers set their plan in motion. Here's a step-by-step look at how synthetic videos are crafted to bypass facial authentication systems:

- Data Collection – The fraudster identifies a target and uses phishing or social engineering tactics to obtain the target’s biometric data, such as photos, videos, or voice samples.

- Dynamic Modeling – Using the harvested data, the attacker builds a model that replicates the target’s facial features and movements.

- Video Rendering – With that model, the attacker renders a realistic animation that mimics natural blink rates and head movements, often enhanced with lighting effects such as RGB overlays, in order to evade 2D liveness detection.

- Attack Execution – The fake video is then injected into a video call or uploaded to a biometric verification system. By exploiting weaknesses such as frame-based blink detection, the attacker tricks the system into accepting them as the real user.
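The attack step above hinges on a weakness in frame-based blink detection: a check that only asks "did the eyes close in some frame?" is satisfied by any rendered animation that includes a blink. The minimal Python sketch below contrasts that naive check with a slightly stricter temporal one. It is hypothetical throughout: it assumes an upstream landmark model has already labeled each frame as eyes-open or eyes-closed, and the function names and thresholds (`min_blinks`, `min_interval_cv`) are illustrative, not taken from any real liveness product.

```python
from statistics import mean, pstdev


def naive_blink_check(eye_open_by_frame: list[bool]) -> bool:
    """Frame-based check: pass if any single frame shows closed eyes."""
    return any(not is_open for is_open in eye_open_by_frame)


def blink_onsets(eye_open_by_frame: list[bool]) -> list[int]:
    """Frame indices where the eyes go from open to closed (start of a blink)."""
    return [
        i
        for i in range(1, len(eye_open_by_frame))
        if eye_open_by_frame[i - 1] and not eye_open_by_frame[i]
    ]


def temporal_blink_check(
    eye_open_by_frame: list[bool],
    min_blinks: int = 3,
    min_interval_cv: float = 0.2,  # hypothetical threshold
) -> bool:
    """Pass only if there are enough blinks AND their timing varies.

    Hypothetical heuristic: measures the coefficient of variation (std / mean)
    of the gaps between blinks. A value near 0 means blinks arrive like
    clockwork, which this sketch treats as suspicious. CV is unit-free, so
    gaps in frames work as well as gaps in seconds.
    """
    onsets = blink_onsets(eye_open_by_frame)
    # At least 3 onsets are needed to get 2 intervals and a meaningful spread.
    if len(onsets) < max(min_blinks, 3):
        return False
    intervals = [b - a for a, b in zip(onsets, onsets[1:])]
    cv = pstdev(intervals) / mean(intervals)
    return cv >= min_interval_cv
```

A video that blinks exactly once every 90 frames passes the naive check but fails the temporal one. This is only one weak signal: a more careful forgery could randomize blink timing, which is why the defenses below layer several independent checks.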

The Enterprise Response to Deepfake Threats

As deepfake attacks grow more sophisticated, it’s critical for enterprises to move beyond traditional know-your-customer (KYC) checks. Leading organizations are now adopting intelligent, multi-layered defenses to identify manipulation earlier and respond faster.

Strengthening Account Protection

By monitoring login behavior, device patterns, and access anomalies, enterprises can detect threats like remote access trojans (RATs) or credential stuffing before identity verification even begins.
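As a rough illustration of login-anomaly monitoring, the hypothetical Python sketch below targets credential stuffing, one of the threats named above: a single source IP failing logins against many different usernames within a short window. The `LoginEvent` fields, the five-minute window, and the distinct-user threshold are assumptions chosen for illustration; a real system would likely weigh many more signals.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class LoginEvent:
    """Hypothetical login record."""
    timestamp: float  # seconds since epoch
    source_ip: str
    username: str
    success: bool


class StuffingDetector:
    """Flags source IPs that fail logins against many distinct usernames
    inside a sliding time window (illustrative thresholds only)."""

    def __init__(self, window_seconds: float = 300.0, max_distinct_users: int = 10):
        self.window_seconds = window_seconds
        self.max_distinct_users = max_distinct_users
        # Per-IP queue of (timestamp, username) for recent failed logins.
        self._failures: dict[str, deque] = defaultdict(deque)

    def observe(self, event: LoginEvent) -> bool:
        """Record an event; return True if its source IP now looks suspicious."""
        failures = self._failures[event.source_ip]
        if not event.success:
            failures.append((event.timestamp, event.username))
        # Drop failures that have fallen out of the sliding window.
        while failures and event.timestamp - failures[0][0] > self.window_seconds:
            failures.popleft()
        distinct_users = {username for _, username in failures}
        return len(distinct_users) > self.max_distinct_users
```

Counting distinct usernames rather than raw failures is the design choice here: one user mistyping a password repeatedly stays below the threshold, while one IP cycling through a leaked credential list does not.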

Enhancing Biometric Defenses with AI

Modern biometric systems leverage AI to detect subtle inconsistencies in facial dynamics, going far beyond basic blink or motion detection to spot deepfake attempts.

Integrating Device Intelligence

Endpoint signals — such as emulator usage, frequent resets, or hardware mismatches — are increasingly used to uncover spoofed devices and stop fraud at its source.
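The three endpoint signals mentioned above (emulator usage, frequent resets, hardware mismatches) can be expressed as simple rules. This is a minimal, hypothetical Python sketch: it assumes some client-side SDK has already collected the telemetry, and the `DeviceSignals` fields and `max_resets` threshold are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class DeviceSignals:
    """Hypothetical endpoint telemetry gathered at verification time."""
    is_emulator: bool
    resets_last_30_days: int
    reported_model: str  # what the device claims to be
    observed_model: str  # what a hardware fingerprint suggests it is


def device_risk_reasons(signals: DeviceSignals, max_resets: int = 2) -> list[str]:
    """Return human-readable reasons a device looks spoofed (empty list = clean)."""
    reasons = []
    if signals.is_emulator:
        reasons.append("emulator detected")
    if signals.resets_last_30_days > max_resets:
        reasons.append(f"{signals.resets_last_30_days} resets in 30 days")
    # Case- and whitespace-insensitive comparison of claimed vs. observed hardware.
    if signals.reported_model.strip().lower() != signals.observed_model.strip().lower():
        reasons.append("reported and observed hardware do not match")
    return reasons
```

Returning reasons rather than a bare yes/no makes it easier for an analyst to see why a session was stopped, and lets downstream logic weigh each signal differently.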

Modeling Behavior in Context

Synthetic videos can mimic appearances, but not real behavior. By combining behavioral baselines with external intelligence, organizations can build dynamic risk profiles and flag suspicious activity in real time.
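One simple way to express a behavioral baseline is a z-score: how many standard deviations the current session sits from the user’s own history. The hypothetical Python sketch below blends that with a single external-intelligence flag (for example, whether the account or device appears on a shared fraud watchlist) into a score between 0 and 1. The feature being measured, `z_threshold`, and the 50/50 `watchlist_weight` are all assumptions for illustration, not a recommended model.

```python
from statistics import mean, stdev


def behavior_risk_score(
    history: list[float],
    current: float,
    on_external_watchlist: bool,
    z_threshold: float = 3.0,     # hypothetical: z at or above this = max baseline risk
    watchlist_weight: float = 0.5,  # hypothetical weight for external intelligence
) -> float:
    """Blend deviation from a personal baseline with an external flag, in [0, 1].

    `history` holds past values of one behavioral feature for this user,
    e.g. seconds taken to complete a form (hypothetical example).
    """
    if len(history) < 2:
        baseline_risk = 0.5  # too little history: treat as uncertain
    else:
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly constant history: any change is maximally unusual.
            z = 0.0 if current == mu else float("inf")
        else:
            z = abs(current - mu) / sigma
        baseline_risk = min(z / z_threshold, 1.0)
    external_risk = 1.0 if on_external_watchlist else 0.0
    return (1 - watchlist_weight) * baseline_risk + watchlist_weight * external_risk
```

With a history of `[10, 12, 11, 9, 13]`, a current value of `11` scores `0.0`, while `2` saturates the baseline term and scores `0.5`, rising to `1.0` if the external watchlist also flags the account. Because the score is recomputed on every session, the profile stays dynamic as the user’s history grows.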

Learn more about how deepfake-enabled fraud works and how to stay ahead of it in our latest video, “From Deepfakes to Fake Loans: The New Face of Lending Fraud.”

References

United Nations Office on Drugs and Crime. (2025). Inflection point: Global implications of scam centres, underground banking and illicit online marketplaces in Southeast Asia. https://www.unodc.org/roseap/uploads/documents/Publications/2025/Inflection_Point_2025.pdf